CI

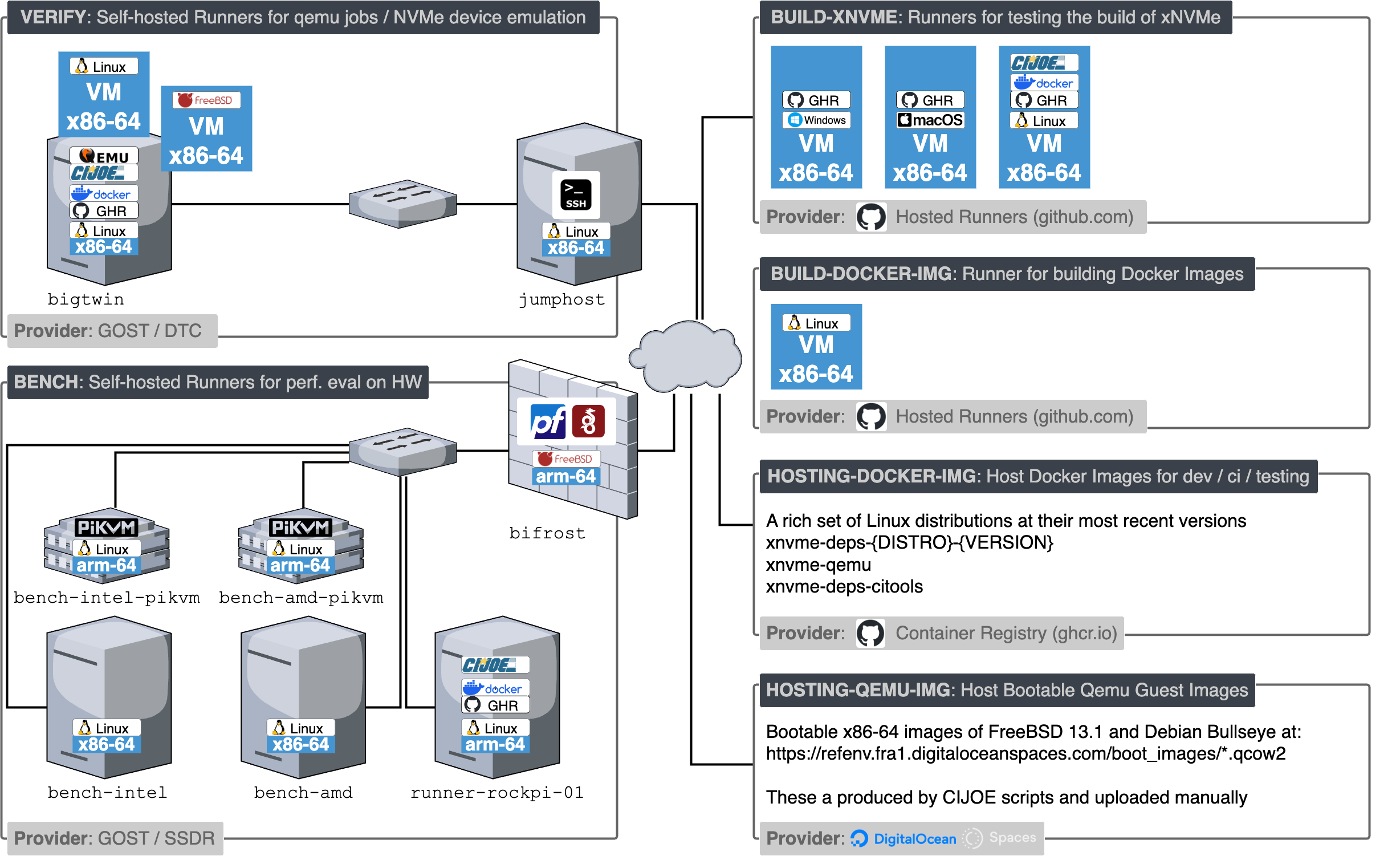

An overview of the environments and the virtual, and physical resources utilized for the xNVMe CI is illustrated below.

xNVMe CI environments and resources#

The figure above is outdated: the bench and verify systems have been migrated to a MaaS provider and are no longer on-premises. One system remains on-premises, however, because no MaaS provider currently offers a suitable replacement.

Infrastructure

The main logical infrastructure component of the xNVMe CI is GitHub Actions (GHA). GHA handles events occurring on the following repositories:

GHA then decides what to execute and where; in other words, GHA is used as a resource scheduler and pipeline engine. The executor role is delegated to CIJOE; for details, see CIJOE in xNVMe.

The motivation for this separation is to make it simpler to reproduce build, test, and verification issues that occur during a CI run, using locally available resources, by executing the workflows and scripts described in CIJOE in xNVMe.
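To make the division of labour concrete, here is a minimal, purely illustrative Python sketch of a scheduler that maps repository events to named workflows and hands execution to a separate, injectable executor. None of the names used (`EVENT_WORKFLOWS`, `schedule`, `execute`, `run_pipeline`, or the event and workflow strings) come from xNVMe, GHA, or CIJOE; they are hypothetical stand-ins. The point it shows is that the executor only needs a workflow name, so the same call works in CI and on a local machine.

```python
from typing import Callable, Dict, List

# Hypothetical mapping from repository events to workflow names.
# The real GHA triggers and CIJOE workflows are defined elsewhere.
EVENT_WORKFLOWS: Dict[str, List[str]] = {
    "push": ["format", "build", "test"],
    "pull_request": ["format", "build", "test"],
    "release": ["build", "test", "docs"],
}


def schedule(event: str) -> List[str]:
    """Scheduler role: decide *what* to run for an event; nothing is executed here."""
    return EVENT_WORKFLOWS.get(event, [])


def execute(workflow: str, runner: Callable[[str], int]) -> int:
    """Executor role: run a single workflow through an injected runner.

    In CI the runner would target whatever machine the scheduler picked
    (assumed); locally it can be a plain function, which is what makes
    CI failures reproducible outside of CI.
    """
    return runner(workflow)


def run_pipeline(event: str, runner: Callable[[str], int]) -> Dict[str, int]:
    """Glue: schedule, then execute each workflow and record its exit code."""
    return {wf: execute(wf, runner) for wf in schedule(event)}


if __name__ == "__main__":
    # A local runner that only reports and pretends to succeed.
    def local_runner(workflow: str) -> int:
        print(f"running {workflow} locally")
        return 0

    print(run_pipeline("pull_request", local_runner))
```

Keeping `schedule` free of side effects and passing the runner in as a parameter mirrors the GHA/CIJOE split: swapping the runner changes where work happens, not what is run.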

Jobs

The jobs performed by the xNVMe CI catch the following issues during the integration of changes and contributions:

- Code format issues
  - Linting and code formatting
    - clang-format for C
    - clippy for Rust
    - black / ruff for Python
- Build issues
  - Build with debug enabled and disabled
  - Detect linking issues with both the static and the shared library
  - Tested on every OS listed in the Toolchain section
- Functional regressions
  - Running logical tests exercising all code paths
    - Using a naive "ramdisk" backend
    - Using emulated NVMe devices via QEMU
    - Using physical machines
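The build checks multiply: every OS is combined with debug enabled and disabled, and with both library flavours. The following minimal Python sketch enumerates such a matrix. It assumes, hypothetically, that the static/shared linking check is modelled as its own matrix dimension, and the OS labels are placeholders rather than the actual list from the Toolchain section. With three OS entries it yields 3 × 2 × 2 = 12 configurations.

```python
from itertools import product
from typing import Dict, List

# Placeholder OS labels; the authoritative list lives in the Toolchain section.
OPERATING_SYSTEMS = ["os-a", "os-b", "os-c"]

DEBUG_MODES = ("enabled", "disabled")
# Assumption: static vs. shared linking is treated as a separate dimension.
LINKAGES = ("static", "shared")


def build_matrix(operating_systems: List[str]) -> List[Dict[str, str]]:
    """Return one build configuration per (os, debug, linkage) combination."""
    return [
        {"os": os_name, "debug": debug, "linkage": linkage}
        for os_name, debug, linkage in product(operating_systems, DEBUG_MODES, LINKAGES)
    ]


if __name__ == "__main__":
    for cfg in build_matrix(OPERATING_SYSTEMS):
        print(cfg)
```

Generating the matrix rather than listing it by hand means adding an OS to the list automatically adds all four of its debug/linkage variants.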

In addition to catching issues, the CI is also used for:

- Benchmarking xNVMe
  - Using physical machines
  - Measuring peak IOPS on a single physical CPU core
  - Specifically for the integration of xNVMe in SPDK (bdev_xnvme)
- Statically analyzing the C code base
  - CodeQL via GitHub
  - Coverity
- Producing and deploying documentation
  - Run all example commands (.cmd files) and collect their output in .out files
  - Render the Sphinx-doc documentation as HTML
  - Upload the rendered documentation to xnvme.io via GitHub Pages
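The documentation job runs every example command (.cmd file) and stores its output in a .out file. A minimal Python sketch of that step follows. It assumes one shell command per line, that blank lines and lines starting with `#` are skipped, and that stdout and stderr are simply concatenated; these conventions, and the helper names `run_cmdfile` and `run_all`, are hypothetical and not taken from the xNVMe tooling.

```python
import subprocess
from pathlib import Path
from typing import List


def run_cmdfile(cmdfile: Path) -> Path:
    """Run each command line of a .cmd file in a shell and write the
    collected output to a sibling .out file. Returns the .out path."""
    outfile = cmdfile.with_suffix(".out")
    chunks = []
    for line in cmdfile.read_text().splitlines():
        cmd = line.strip()
        if not cmd or cmd.startswith("#"):
            continue  # skip blank lines and comments (assumed convention)
        result = subprocess.run(cmd, shell=True, capture_output=True, text=True)
        chunks.append(result.stdout + result.stderr)
    outfile.write_text("".join(chunks))
    return outfile


def run_all(docs_root: Path) -> List[Path]:
    """Process every .cmd file found under docs_root, recursively."""
    return [run_cmdfile(p) for p in sorted(docs_root.rglob("*.cmd"))]


if __name__ == "__main__":
    import sys

    root = Path(sys.argv[1]) if len(sys.argv) > 1 else Path(".")
    for out in run_all(root):
        print(f"wrote {out}")
```

Writing the .out file next to its .cmd file keeps each example and its captured output together, so the documentation renderer can pick up both by name.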

The following sections provide system-setup notes and other details for the various CI jobs.